Dimensionality reduction¶

fMRI analysis often has a dimensionality problem: we get approximately 100,000 voxels (i.e., features) per volume, but only 100s of time points or trials (i.e., examples). This makes it very hard for machine learning algorithms to model how each voxel contributes. For more general information on this problem, also dubbed the curse of dimensionality, see these slides from the Texas A&M University Computer Science and Engineering Department. For a neuroimaging-specific view on the curse of dimensionality, you might want to take a look at Mwangi et al.'s Neuroinformatics review from 2014.

In this notebook we are going to learn various methods that can help us reduce the dimensionality of fMRI data.

Goal of this script¶

- Learn to compute the covariance of a dataset.

- Reduce the feature space using principal component analysis (PCA).

- Interpret the meaning of PCA components.

- Perform feature selection using cross-validation.

Pre-requisties¶

You should be familiar with the functions in the data loading and classification notebooks.

Table of Contents¶

3.1 Plot PCA

3.2 Scree Plots

3.3 Interpreting Components

3.4 Normalization

3.5 PCA dimensionality reduction and classification

4.1 Feature Selection: Pipelines

4.2 Feature Selection: Univariate

Exercises

Dataset: For this script we will use a localizer dataset from Kim et al. (2017) again. Just to recap: The localizer consisted of 3 runs with 5 blocks of each category (faces, scenes and objects) per run. Each block was presented for 15s. Within a block, a stimulus was presented every 1.5s (1 TR). Between blocks, there was 15s (10 TRs) of fixation. Each run was 310 TRs. In the matlab stimulus file, the first row codes for the stimulus category for each trial (1 = Faces, 2 = Scenes, 3 = Objects). The 3rd row contains the time (in seconds, relative to the start of the run) when the stimulus was presented for each trial.

import warnings

import sys

if not sys.warnoptions:

warnings.simplefilter('ignore')

# Import neuroimaging, analysis and general libraries

import numpy as np

from time import time

import pandas as pd

# Import plotting libraries

import matplotlib.pyplot as plt

import seaborn as sns

# Machine learning libraries

from sklearn.model_selection import cross_val_score, cross_validate, PredefinedSplit

from sklearn.svm import SVC

from sklearn.decomposition import PCA

from sklearn.feature_selection import SelectKBest, RFECV, f_classif

from sklearn.pipeline import Pipeline

%matplotlib inline

%autosave 5

sns.set(style = 'white', context='poster', rc={'lines.linewidth': 2.5})

sns.set(palette="colorblind")

# load some helper functions

from utils import load_labels, load_data, blockwise_sampling, label2TR, shift_timing, reshape_data

from utils import normalize, decode

# load some constants

from utils import vdc_data_dir, vdc_all_ROIs, vdc_label_dict, vdc_n_runs, vdc_hrf_lag, vdc_TR, vdc_TRs_run

print('Here\'re some constants, which is specific for VDC data:')

print('data dir = %s' % (vdc_data_dir))

print('ROIs = %s' % (vdc_all_ROIs))

print('Labels = %s' % (vdc_label_dict))

print('number of runs = %s' % (vdc_n_runs))

print('1 TR = %.2f sec' % (vdc_TR))

print('HRF lag = %.2f sec' % (vdc_hrf_lag))

print('num TRs per run = %d' % (vdc_TRs_run))

1. Load the data ¶

Load the data for one participant using these helper functions.

sub_id = 1

mask_name = 'FFA' # This is set in order to reduce memory demands in order to run within 4Gb, however, if you want to make this run on whole brain, then set this to ''

# Specify the subject name

sub = 'sub-%.2d' % (sub_id)

# Convert the shift into TRs

shift_size = int(vdc_hrf_lag / vdc_TR)

# Load subject labels

stim_label_allruns = load_labels(vdc_data_dir, sub)

# Load run_ids

run_ids_raw = stim_label_allruns[5,:] - 1

# Load the fMRI data using a mask

epi_mask_data_all = load_data(vdc_data_dir, sub, mask_name=mask_name)[0]

# This can differ per participant

print(sub, '= TRs: ', epi_mask_data_all.shape[1], '; Voxels: ', epi_mask_data_all.shape[0])

TRs_run = int(epi_mask_data_all.shape[1] / vdc_n_runs)

# Convert the timing into TR indexes

stim_label_TR = label2TR(stim_label_allruns, vdc_n_runs, vdc_TR, TRs_run)

# Shift the data some amount

stim_label_TR_shifted = shift_timing(stim_label_TR, shift_size)

# Perform the reshaping of the data

bold_data_raw, labels_raw = reshape_data(stim_label_TR_shifted, epi_mask_data_all)

# Normalize raw data within each run

bold_normalized_raw = normalize(bold_data_raw, run_ids_raw)

# Down sample the data to be blockwise rather than trialwise.

#We'll use the blockwise data for all the

bold_data, labels, run_ids = blockwise_sampling(bold_data_raw, labels_raw, run_ids_raw)

# Normalize blockwise data within each run

bold_normalized = normalize(bold_data, run_ids)

Previously, we have been using data from each trial. Within each block, the voxel activity is correlated across trials. Thus, it is common (and probably better) to take the average value of the activity within a block as your observation in decoding analyses in order to avoid concerns about non-independence. Mean values of activity or beta coefficients (from GLM) are commonly used in the literature.

Self-study: We introduce a simple kind of debugging here, as we print both the number of expected and resampled blocks (resampled refers to the conversion from trialwise data to blockwise data). Thus, if something went wrong, we would be able to spot it the output. Learn about more ways of debugging your code by using assertions here.

2. Covariance ¶

As a precursor to understanding dimensionality reduction techniques, we need to learn how to compute the covariance matrix because it is often used in these methods.

By default, we used an FFA mask to reduce the memory demands in this notebook, but if possible we recommend that you use no mask in order to grapple with the memory issues of working with wholebrain data. There are nearly 1 million voxels in every volume we acquire, of which about 15% are in the brain. The data matrix of >100,000 voxels and <1000 time points is very large, making any computations on all of this data very intensive.

The covariance of two variables is calculated as follows: $$ Cov(X,Y) = \frac{\sum_{1}^{N}(X-\bar{X})(Y-\bar{Y})}{(N-1)}$$ where $\mbox{ } \bar{X} = mean(X), \mbox{ } \bar{Y} = mean(Y), \mbox{ } N = \mbox{number of samples } $

In fMRI, X and Y could be time-series data for two voxels (two columns in our time by voxels data matrix) or the pattern across voxels for two different time points (two rows in the data matrix). The choice of vectors depends on the application.

Exercise 1: Compute the covariance between two blocks (i.e., their averaged patterns across voxels). The steps to do this are outlined below. You could just use a function but we want you to code the individual steps as described (refer here for additional help)

# Enter your code here

# Compute the mean of one row of the block-averaged bold data called: X

# Compute the mean of any other row of the block-averaged bold data called: Y

# Compute the differences of individual voxel values in these rows from the corresponding mean for X or Y.

# Compute the pointwise product of the difference vectors across the two rows.

# Sum over the products of the differences.

# Complete the covariance calculation with these values.

# Compare your result to the answer obtained with np.cov(X,Y)

Covariance is dependent on the unit and scale of the measurement. Its value is thus not easily interpretable or comparable across datasets -- e.g. is there a strong relationship between X and Y if the covariance is 200 as compared to 2 or 2000?

Correlation solves this problem by normalizing the range of the covariance from -1 to +1.

$$ Corr(X,Y) = \frac{Cov(X,Y)}{\sqrt{\frac{\sum_{1}^{N}(X-\bar{X})^2}{(N-1)}}\sqrt{\frac{\sum_{1}^{N}(Y-\bar{Y})^2}{(N-1)}}}$$Exercise 2: Compute the correlation between all pairs of blocks manually (one pair at a time) and compare the result with a numpy function that calculates the block-by-block correlation matrix in one step.

# Compute the correlation manually

# Now with a function

# Insert your code here.

# Subselect 100 voxels from bold_data into a matrix.

# Use np.cov() to compute the covariance of this matrix.

3. PCA ¶

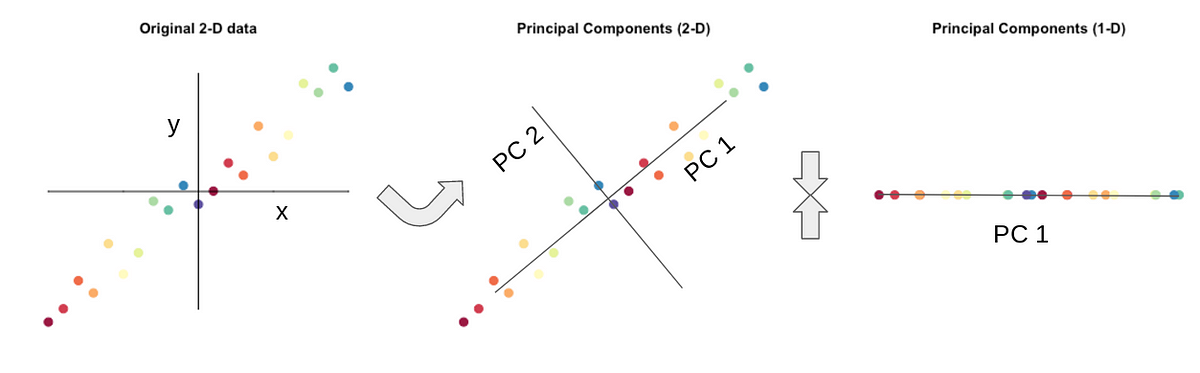

We will use principal component analysis (PCA) to reduce the dimensionality of the data. Some voxels may contain correlated information or no information and so the original voxel-dimensional data matrix (time-by-voxels) can be projected into a lower-dimensional "component" matrix space (time-by-component) without losing much information.

# We now use the PCA function in scikit-learn to reduce the dimensionality of the data

# The number of components was chosen arbitrarily.

pca = PCA(n_components=20)

bold_pca = pca.fit_transform(bold_data)

print('Original data shape:', bold_data.shape)

print('PCA data shape:', bold_pca.shape)

3.1 Plot PCA ¶

Let's visualize the variance in the data along different component dimensions.

# Setting plotting parameter

n_bins=75

# Plot

n_plots = 4

components_to_plot = [0,1,2,19]

f, axes = plt.subplots(1, n_plots, figsize=(14, 14/n_plots))

st=f.suptitle("Figure 3.1. Histogram of values for each PC dimension ", fontsize="x-large")

for i in range(n_plots):

axes[i].hist(bold_pca[:, components_to_plot[i]],

bins=n_bins)

# mark the plots

axes[i].set_title('PC Dimension %d'%(components_to_plot[i]+1))

axes[i].set_ylabel('Frequency')

axes[i].set_xlabel('Value')

axes[i].set_xticks([])

axes[i].set_yticks([])

f.tight_layout()

st.set_y(0.95)

f.subplots_adjust(top=0.75)

Let's visualize the relationship between variances across pairs of components.

"""

Plot the low dim representation of the bold data

"""

# Setting plotting parameters

alpha_val = .8

cur_pals = sns.color_palette('colorblind', n_colors=vdc_n_runs)

# Plot

n_plots = 3

f, axes = plt.subplots(1, n_plots, figsize=(14,5))

st=f.suptitle("Figure 3.2. Scatter plots comparing PCA dimensions ", fontsize="x-large")

# plot data

axes[0].scatter(bold_pca[:, 0], bold_pca[:, 1],

alpha=alpha_val, marker='.', color = 'k')

axes[1].scatter(bold_pca[:, 2], bold_pca[:, 3],

alpha=alpha_val, marker='.', color = 'k')

axes[2].scatter(bold_pca[:, 18], bold_pca[:, 19],

alpha=alpha_val, marker='.', color = 'k')

axes[0].set_title('PCA Dimensions\n1 x 2')

axes[1].set_title('PCA Dimensions\n3 x 4')

axes[2].set_title('PCA Dimensions\n18 x 19')

# modifications that are common to all plots

for i in range(n_plots):

axes[i].axis('equal')

axes[i].set_xticks([])

axes[i].set_yticks([])

f.tight_layout()

st.set_y(0.95)

f.subplots_adjust(top=0.75)

3.2 Scree plots ¶

A "scree" plot can depict the amount of variance in the original data that is explained by each component.

Exercise 4: Make a scree plot for the PCA above. How many components would be sufficient to account for most of the variance?

# Plot the scree plot

A:

3.3 Interpreting Components ¶

From the previous plot of the first and second PCA dimension, you can see you have three clusters. You might assume that they correspond to faces, scenes, and objects.

Exercise 5: Determine what the three clusters correspond to. First, create a new scatter plot of these two components and mark (e.g., in different symbols or colors) each point on the plot by visual category. Then, create a second scatter plot with points labeled in a way that better corresponds to the clusters (complete this exercise before reading further). (Hint: What else was there three of?)

# Put answer

3.4 Normalization ¶

We ran the PCA analysis without normalizing the data.

Exercise 6: Using the variable bold_normalized re-compute the PCA (components=20). Plot the results with a scatter plot like Figure 3.2. What was the effect of normalization and why is this useful?

# Insert code here

3.5 PCA dimensionality reduction and classification ¶

As mentioned earlier, we use PCA to reduce the dimensionality of the data and thus minimize the 'curse of dimensionality'. Below we explore how PCA affects classification accuracy.

# Run a basic n-fold classification

# Get baseline, whole-brain decoding accuracy without PCA

print('Baseline classification')

print('Original size: ', bold_normalized.shape)

svc = SVC(kernel="linear", C=1)

start = time()

models, scores = decode(bold_normalized, labels, run_ids, svc)

end = time()

print('Accuracy: ', scores)

print('Run time: %0.4fs' %(end - start))

# Run the classifier on data in component space

pca = PCA(n_components=20)

bold_pca_normalized = pca.fit_transform(bold_normalized)

print('PCA (c=%d) classification' % bold_pca_normalized.shape[1])

print('New size after PCA: ', bold_pca_normalized.shape)

start = time()

models_pca, scores_pca = decode(bold_pca_normalized, labels, run_ids, svc)

end = time()

print('Accuracy: ', scores_pca)

print('Run time: %0.4fs' %(end - start))

In this case PCA does not improve decoding accuracy. However, note that similar performance was achieved with 20 vs. 177,314 features, that the analysis ran 500x faster, and that the resulting model is likely to generalize better to new data (e.g., from a different subject).

# Insert code

3.5.1 The PCA Challenge ¶

Exercise 8: Given that some of the early PCA dimensions may not be cognitively relevant, determine the smallest number of PCA components from which you can get the highest decoding accuracy.

4. Feature selection using cross-validation ¶

When we took a few PCA components instead of all voxels, we were performing feature selection. Feature selection is used to reduce noise and increase computational speed. However, a problem with the approach above is that feature selection is applied to all data (prior to division into training and test sets) and is thus a kind of double dipping.

A better way to select features is during cross-validation. In this case, feature selection is only performed on the training set, and the same features are used on the test data. This way the classifier never sees the test data during training.

We will perform feature selection during cross-validation in this section. The Pipelines method in scikit-learn provides an easy interface to perform these steps and we will use it extensively.

4.1 Pipelines: Feature selection with cross-validation ¶

The scikit-learn has a method, Pipeline, that simplifies running a sequence of steps in an automated fashion. Below we create a pipeline with the following steps:

Perform dimensionality reduction.

Run an SVM.

To do this systematically during cross-validation, we will embed Pipeline in the cross_validate method in scikit-learn.

The steps below are based on this example in scikit-learn.

# Example:

# Set up the pipeline

pipe = Pipeline([

('reduce_dim', PCA(n_components=20)),

('classify', SVC(kernel="linear", C=1)),

])

# Run the pipeline with cross-validation

ps = PredefinedSplit(run_ids) # Provides train/test indices to split data in train/test sets

clf_pipe = cross_validate(

pipe,bold_normalized,labels,cv=ps,

return_train_score=True

)

# Print results from this dimensionality reduction technique

print(clf_pipe)

print ("Average Testing Accuracy: %0.2f" % (np.mean(clf_pipe['test_score'])))

Print out the data indices that were used for training and testing. Ensure that they are different for each fold.

# Print train/test split

for cv_idx ,(train_index, test_index) in enumerate(ps.split(bold_normalized, labels)):

print('CV iteration: %s' % cv_idx)

print('Train_index: ')

print(train_index)

print('Test_index: ')

print(test_index)

# Print results from this dimensionality reduction technique

print(clf_pipe)

print ("Average Testing Accuracy: %0.2f" % (np.mean(clf_pipe['test_score'])))

4.2 Feature selection: Univariate ¶

We can also use a variety of univariate methods to do feature selection in scikit-learn. One commonly used technique is to compute an ANOVA on the data and pick voxels with large F values. The F value measures the ratio of the variance between conditions (signal) to the variance within condition (noise). You can learn more about the ANOVA here: ANOVA F-value. Note that implementing this completely different feature selection approach requires changing only one line in the pipeline, demonstrating the usefulness of this framework.

Exercise 9: Implement the pipeline using ANOVA F-value (imported as f_classif) and the SelectKBest method pick the top 100 voxels with the highest F values.

# Insert code

Novel contribution: be creative and make one new discovery by adding an analysis, visualization, or optimization. This week we encourage you to implement a different feature selection approach.

Contributions ¶

M. Kumar, C. Ellis and N. Turk-Browne produced the initial notebook 02/2018

T. Meissner minor edits and added the ICA section

Q. Lu revise PCA plots, cb colors, code style improvement, leverage exisiting funcs

H. Zhang added pipeline section, use blockwise normalized data, other edits

M. Kumar enhanced section introductions.

K.A. Norman provided suggestions on the overall content and made edits to this notebook.

C. Ellis implemented comments from cmhn-s19